Roottrees, AI, and Perception

Outside of IFComp season, it’s rare that I explicitly assign a game a status of “will not finish” — this blog owes its very existence to my tendency to go for “will finish later” instead. It’s even rarer that I post about it here when I do. But here we are. My experience with The Roottrees are Dead has some peculiarities worth recording.

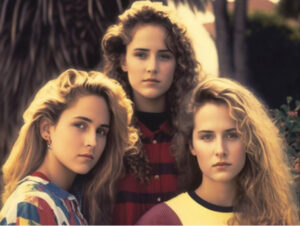

The Roottrees are Dead is a free online deduction game along the lines of Return of the Obra Dinn and The Case of the Golden Idol. The plot involves identifying heirs to a fortune by filling out an extensive family tree, using information largely obtained from in-game web searches. (The UI for this feels weird: rather than show you the individual search results, it narrates a summary of them, but it displays this narration on the in-game CRT as if it were the search engine output.) There’s a tutorial where you have to identify three sisters in a photograph before you get access to the full family tree, although even in the tutorial you have full access to the search engine, as well as a long list of names you can search for long before they become relevant.


And that’s basically as far as I got. One of the three sisters was easy to identify, but the other two? I could not tell which was which from the information given, and even though there were only two possibilities, I was too proud to simply take a random guess so early in the game. Or perhaps not just proud; my experience with games of this sort is that guessing degrades the experience. It’s not just that it deprives you of the satisfaction of feeling clever at that moment, it’s also that each thing you puzzle out teaches you a little bit about how the author thinks and what kind of information is important. Skipping stuff without even an after-the-fact explanation of how you were supposed to have figured it out makes it harder to figure out more things later. And this was the tutorial, the part of the game that’s specifically meant to teach you, so skipping through it without learning what I was supposed to learn seemed potentially disastrous for what followed.

And so, when I finally gave up after an hour or two of diving down in-game web-search rabbit-holes and finding no new information about the sisters, rather than just guess, I sought hints. I was flabbergasted to learn that the intended solution involved noticing that the sister in the back is wearing a plaid shirt. I had seen the mention of plaid in the web-search text about the sisters, but had dismissed it as irrelevant to the photo because obviously no one in it was wearing plaid; the shirt that I was now being told is plaid was clearly a shirt with red-and-black horizontal stripes. I took a closer look at the picture: maybe it was really plaid, and just looked like stripes to me? No, scrutiny just confirmed my initial impression.

Now, here’s the weird part. I’ve talked about this with several other people online, on the game’s official Discord channel and elsewhere. Every single person who weighed in on the matter had simply perceived the shirt as plaid. Some conceded that it’s actually striped if you look more closely, but they didn’t notice this at first glance. I didn’t perceive it as plaid at first glance, and I still can’t perceive it as plaid no matter how hard I try. But apparently I’m the only one like this! This game has been out for three months, and this is the very first photo in it (heck, it’s on the game’s title screen!), and you have to inspect it closely for details to figure out who the third sister is, and yet I find no trace of anyone else online having my problems. I suppose some people might have had problems, guessed, and got on with it. But the game also has more than 20 credited beta testers, all of whom apparently signed off on the photo. The author himself appears to have seen nothing wrong with it. Wait, how? I can understand, at least in theory, how you could look at a picture of a striped shirt and not notice that it isn’t plaid, but how do you make a picture without noticing what you’re making?

The answer is that you use AI. I think we all understand by now that if you tell a neural net to give you a picture of a woman in a plaid shirt, it’s not at all surprising if it gives you a striped shirt instead. All the author had to do was not notice that it isn’t plaid, just like nearly all the players. This, it seems to me, is a big problem with AI generation: it lets you produce art without any human mind paying a whole lot of attention to it. That might be acceptable in some situations (although it sometimes results in people with more arms than intended, and there are very few situations where that’s acceptable), but in a sleuthing game, it’s potentially deadly. I’m told that AI generation was just the first step in this game, and that a lot of the pictures were retouched or had details added by hand for the sake of the puzzles. So the generation of these pictures did involve a human element beyond a casual glance, at least some of the time. Surely the very first picture in the game, the one that makes the first impression, would be worth such care? But again, I have to remind myself that this is apparently a Just Me problem.

But speaking as Just Me, that first impression has, I think, already done irreparable damage. A game of this kind needs the player to trust it to deliver the necessary information clearly and accurately, and that trust has been broken. If I play further, I’ll always be wondering if I’m really seeing what the author intended. If it had happened later in the game, I might have decided to just keep going anyway, just to get to the end. But since it happened before I even got into the game proper, I feel free to just let it go.
